GPTs feel like magic, and this is true even for the folks who created them. Let’s peek into the kitchen and see what goes into creating a GPT. By the end of this post, you’ll be able to explain to a friend, fairly accurately, how a GPT is trained.

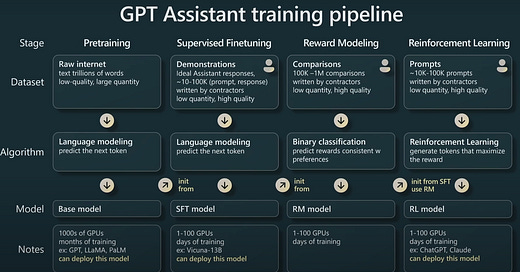

The schematic above and most of this post distill what Andrej Karpathy of OpenAI shared in his keynote at Microsoft Build 2023 [1].

A complex recipe. Let’s break it down the way we’d bake a pizza.

Layer 1- The base

Selecting the base is the start of the bake. Thick crust, thin crust, cauliflower…each choice leads to a different version of GPT.

There are 3 main variations to a fully baked GPT - Pretraining, Supervised Finetuning (SFT) and RLHF (which includes Reward Modeling and Reinforcement Learning). Each variation results in a different flavor of model.

→ Pretraining takes ~99% of the time/compute and spans months. Only the Metas and OpenAIs of the world do this.

→ The other 3 stages (SFT, Reward Modeling and Reinforcement Learning) all fall under the ‘finetuning’ umbrella. In contrast to pretraining, these stages take only hours/days and build on top of the pretrained model.

Pretraining results in a “base model”. It is very powerful at a lot of things and needs to be tamed to please the humans.

SFT and RLHF result in nice, well-behaved assistants like ChatGPT.

Layer 2- The sauce…

Slather some sauce on top of the base. Here the sauce is the…data. I’m digging this analogy now.

Each training stage uses a different dataset and a different objective.

→ Pretraining datasets are typically hundreds of GB to a few TB of internet text, including Common Crawl, Reddit, code bases etc. The model learns to predict the next token based on what it sees in the training data.

→ SFT datasets are examples of input-output pairs, to teach the model its purpose in the universe. These examples are typically human-curated and labeled for a specific task, which is where the ‘supervised’ and ‘fine-tuned’ labels come from.

→ RLHF datasets are where the model is taught to tell good behavior from okay/bad behavior, based on human feedback on model-generated outputs. Eventually, the model leans towards good behavior.
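To make the three kinds of sauce a bit more concrete, here is a toy sketch of what one record from each dataset might look like. Everything in it is made up for illustration: the example texts, the field names (`prompt`, `response`, `chosen`, `rejected`) and the helper `next_token_pairs` are assumptions, and real pipelines use subword tokenizers rather than splitting on spaces.

```python
# Toy illustration only: hypothetical records, whitespace "tokenization".

def next_token_pairs(tokens):
    """Turn a token sequence into (context, next_token) training pairs."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

# Pretraining: raw text. The "label" is simply the next token in the text.
pretraining_text = "the pizza is in the oven"
pairs = next_token_pairs(pretraining_text.split())

# SFT: a human-written input-output pair for a specific task.
sft_example = {
    "prompt": "Explain pretraining in one sentence.",
    "response": "Pretraining teaches a model to predict the next token of text.",
}

# RLHF: human feedback on model-generated outputs, here as a preference
# between two candidate answers to the same prompt.
preference_example = {
    "prompt": "How do I bake a pizza?",
    "chosen": "Preheat the oven, stretch the dough, add sauce and toppings, then bake.",
    "rejected": "Pizza is food.",
}

for context, target in pairs[:3]:
    print(context, "->", target)
```

Notice that the pretraining data needs no human labeling at all, while the SFT and RLHF records each need a human in the loop. That is a big part of why those datasets are so much smaller.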

Layer 3- The toppings

Next, let’s add some toppings, i.e. the training algorithm and training objective.

Each model variation is trained on its corresponding dataset with its corresponding objective, each resulting in a pizza in its own right.

→ For Pretraining, the raw transformer model is trained to predict the next token, based on the universal set of raw text it sees during training.

→ For SFT, the pretrained model is again trained to predict the next token, but this time on the human-labeled, task-specific dataset, so its predictions learn to mimic those examples.

→ RLHF is the garden-veggie variant where a ton of toppings go on top of the pizza.

First, a Reward Model is trained on human feedback to score the quality of the model’s outputs, which in effect gives the model self-assessment powers.

Second, Reinforcement Learning trains the model to generate text that maximizes the score predicted by the reward model.
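For the curious, the objectives above can be sketched in a few lines of plain Python. This is a toy sketch under stated assumptions, not how any production GPT is trained: the function names are made up for this post, the pairwise ranking loss is one common way to train a reward model from human preferences (assumed here, not taken from the talk), and real RLHF uses more elaborate algorithms than a bare "expected reward".

```python
import math

def softmax(logits):
    """Convert raw scores over the vocabulary into probabilities."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def next_token_loss(logits_per_position, target_ids):
    """Average cross-entropy of the correct next token.

    Pretraining and SFT both minimize this kind of loss; what changes
    is the dataset it is computed on.
    """
    losses = [
        -math.log(softmax(logits)[target])
        for logits, target in zip(logits_per_position, target_ids)
    ]
    return sum(losses) / len(losses)

def reward_pairwise_loss(score_chosen, score_rejected):
    """Assumed ranking loss: -log(sigmoid(score_chosen - score_rejected)).

    It is small when the reward model scores the human-preferred
    output higher than the rejected one.
    """
    return math.log(1.0 + math.exp(-(score_chosen - score_rejected)))

def expected_reward(response_probs, rewards):
    """What the RL stage tries to push up: the average reward-model score
    of the responses the model is likely to generate."""
    return sum(p * r for p, r in zip(response_probs, rewards))

# Uniform guesses over a 4-token vocabulary give a loss of log(4).
uniform = [[0.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 0.0]]
print(next_token_loss(uniform, [1, 3]))

# Ranking the preferred answer higher gives the lower loss.
print(reward_pairwise_loss(2.0, 0.0) < reward_pairwise_loss(0.0, 2.0))

# A model that puts 80% of its probability on the rewarded response.
print(expected_reward([0.8, 0.2], [1.0, 0.0]))
```

The thing to notice is how little changes between the first two stages: same loss, different data. The RLHF toppings are where new objectives actually show up.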

The result is a well-behaved model that mostly impresses but also gets annoying when it answers questions with “As a large language model trained by OpenAI…blah blah”. This is the RLHF-ed garden-veggie pizza.

Alright, that was baking a GPT - hope that satiated your appetite.

P.S. Do you think I have RLHF-ed my baking analogy?

References

[1] “State of GPT”, Andrej Karpathy, Microsoft Build 2023.