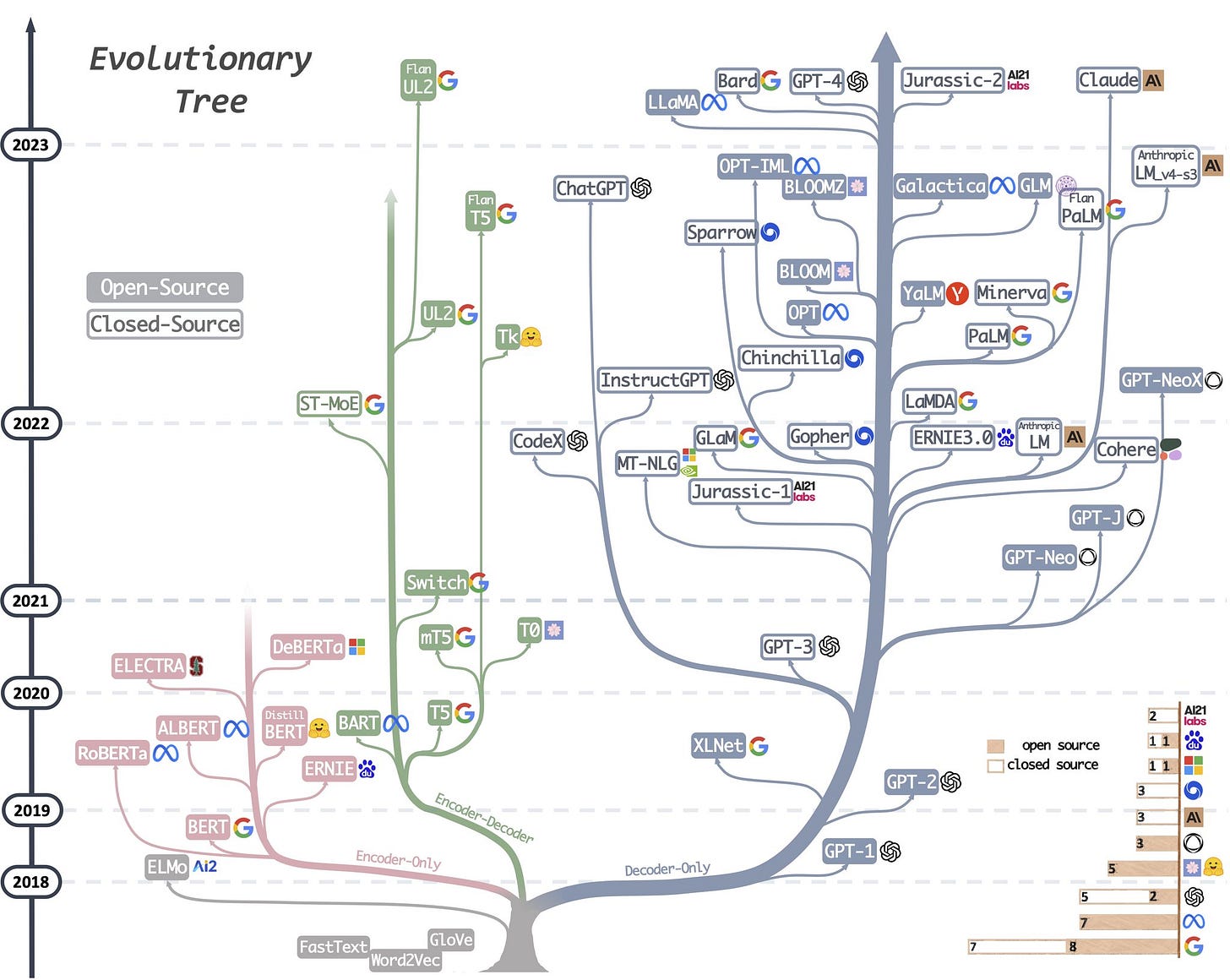

The evolutionary tree above, from this survey paper, captures the explosion in LLMs since 2021 and clearly shows three branches: encoder-only, decoder-only, and encoder-decoder. So what are they?

Yann LeCun, Meta’s Chief AI Scientist, referenced this tree and added some context on the terminology in this tweet.

Clearly, this is a hot mess, so let’s break it down. For a short explanation of what auto-regressive means, check out this glossary item.

At a high level, in the context of language models, an encoder turns a text sequence into a numeric representation. A decoder then turns that encoded representation into human-readable, task-specific output.

Now, referencing the figure above, let’s look at each branch in turn.

Encoder Only models like the BERT family consist of an encoder that processes a variable-length input sequence, such as a sentence, and converts it into contextual embeddings, which are often pooled into a single fixed-length context vector. This context vector carries the semantic meaning of the input sequence and can be used for various downstream tasks.

For example, in sentiment analysis, the encoder processes customer reviews and generates embeddings that capture the sentiment expressed in the text. These embeddings are then fed into a classifier, which plays the role of the decoder, to determine whether the sentiment is positive, negative, or neutral. Since the output is not generated token by token, it is not an auto-regressive decoder.
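To make the encoder-plus-classifier idea concrete, here is a minimal sketch in plain Python. Everything in it is an assumption for illustration: the toy vocabulary, the random vectors standing in for learned embeddings, the mean pooling (a real encoder such as BERT uses self-attention layers), and the untrained linear head. Because nothing is trained, the predicted label is meaningless; the point is only the shape of the pipeline.

```python
import random

random.seed(0)
EMBED_DIM = 4

# Toy vocabulary; random vectors stand in for learned embeddings (assumption).
VOCAB = ["great", "terrible", "the", "product", "is", "really", "not"]
EMBEDDINGS = {w: [random.uniform(-1, 1) for _ in range(EMBED_DIM)] for w in VOCAB}

def encode(tokens):
    """Map a variable-length token list to one fixed-length vector by mean pooling."""
    vectors = [EMBEDDINGS[t] for t in tokens if t in EMBEDDINGS]
    if not vectors:
        return [0.0] * EMBED_DIM
    return [sum(column) / len(vectors) for column in zip(*vectors)]

LABELS = ["negative", "neutral", "positive"]
# Untrained linear classification head: one weight row per label (assumption).
HEAD_WEIGHTS = [[random.uniform(-1, 1) for _ in range(EMBED_DIM)] for _ in LABELS]

def classify(vector):
    """Score every label in a single pass and return the best one (no token-by-token loop)."""
    scores = [sum(w * x for w, x in zip(row, vector)) for row in HEAD_WEIGHTS]
    return LABELS[scores.index(max(scores))]

short_review = ["great", "product"]
long_review = ["the", "product", "is", "really", "not", "great"]
print(len(encode(short_review)), len(encode(long_review)))  # same length for both
print(classify(encode(long_review)))
```

Note that `classify` produces its answer in one step from the pooled vector, which is why this kind of head is not auto-regressive.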

Encoder-Decoder: A setup where an input sequence is processed by an encoder to generate a context vector, and then this context vector is used as the initial state of a decoder, which generates the output sequence token by token in an auto-regressive manner. The decoder is responsible for predicting the next token in the sequence based on the previously generated tokens and the context vector.

Machine translation (like translating between languages) is a classic use case for the encoder-decoder architecture. Here, the encoder processes the input text in one language and generates a context vector that encapsulates its meaning. This context vector serves as the initial state for the decoder, which then generates the corresponding translation in the target language. Each token in the translation is generated based on the context vector and the tokens generated before it, capturing the linguistic nuances of the translation process.
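The token-by-token loop described above can be sketched in a few lines of Python. This is a toy under stated assumptions, not a real translation model: `TOY_DICTIONARY`, `encode`, and `decode_step` are hypothetical stand-ins, and the "context" here is just a tuple of source tokens rather than a learned vector. What it does show is the control flow: encode once, then repeatedly ask the decoder for the next token given the context and everything generated so far.

```python
EOS = "<eos>"

# Hypothetical word-level dictionary standing in for a trained model.
TOY_DICTIONARY = {"the": "le", "cat": "chat", "sleeps": "dort"}

def encode(source_tokens):
    """Stand-in encoder: summarize the source once, before decoding starts."""
    return tuple(source_tokens)

def decode_step(context, generated):
    """Stand-in decoder step: choose the next token from the context
    and the tokens generated so far."""
    position = len(generated)
    if position >= len(context):
        return EOS
    return TOY_DICTIONARY.get(context[position], "<unk>")

def translate(source_tokens, max_len=20):
    context = encode(source_tokens)      # runs once
    generated = []
    for _ in range(max_len):             # auto-regressive loop
        token = decode_step(context, generated)
        if token == EOS:
            break
        generated.append(token)          # output is fed back in on the next step
    return generated

print(translate(["the", "cat", "sleeps"]))  # ['le', 'chat', 'dort']
```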

Decoder Only: Clearly, these models are the largest branch in the evolutionary tree, and they have shown surprising effectiveness in a wide range of tasks, including the ones mentioned above. Decoder-only models (like GPT-2, GPT-3, and GPT-4) still need to first encode the input sequence into embeddings that represent both the token ids and their positions in the sequence, and they ultimately produce an output sequence token by token in an auto-regressive manner.
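As a rough illustration of embeddings that carry both token identity and position, the sketch below sums a token vector and a position vector for each input id. The table sizes and the random values are assumptions chosen for readability; in a trained model these tables would be learned parameters, and other positional schemes exist.

```python
import random

random.seed(0)
EMBED_DIM = 4
VOCAB_SIZE = 10
MAX_POSITIONS = 8

def random_table(rows):
    """Random values standing in for learned embedding parameters (assumption)."""
    return [[random.uniform(-1, 1) for _ in range(EMBED_DIM)] for _ in range(rows)]

TOKEN_TABLE = random_table(VOCAB_SIZE)        # one row per token id
POSITION_TABLE = random_table(MAX_POSITIONS)  # one row per position

def embed(token_ids):
    """Return one vector per input token: token embedding + position embedding."""
    if len(token_ids) > MAX_POSITIONS:
        raise ValueError("sequence longer than MAX_POSITIONS")
    return [
        [t + p for t, p in zip(TOKEN_TABLE[token_id], POSITION_TABLE[position])]
        for position, token_id in enumerate(token_ids)
    ]

vectors = embed([3, 3, 5])
# The same token id (3) at positions 0 and 1 gets two different vectors.
print(vectors[0] != vectors[1])
```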

For instance, in story generation, the model is provided with a prompt or a starting sentence. The decoder then generates the subsequent sentences one by one, considering both the evolving context of the story and the grammar and style of the language. This approach allows the model to produce imaginative and contextually relevant narratives.
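Prompt continuation can be sketched the same way. Unlike the encoder-decoder case, there is no separate encoding pass here: the prompt and the generated text live in one growing sequence. The `NEXT_TOKEN` table is a hypothetical stand-in for a trained model and only looks at the last token, whereas a real decoder-only model attends to the whole sequence so far.

```python
EOS = "<eos>"

# Hypothetical next-token table standing in for a trained language model.
NEXT_TOKEN = {"once": "upon", "upon": "a", "a": "time", "time": EOS}

def generate(prompt_tokens, max_new_tokens=10):
    """Greedily extend the prompt one token at a time until EOS or the limit."""
    sequence = list(prompt_tokens)
    if not sequence:
        return sequence
    for _ in range(max_new_tokens):
        token = NEXT_TOKEN.get(sequence[-1], EOS)
        if token == EOS:
            break
        sequence.append(token)  # the new token becomes part of the context
    return sequence

print(generate(["once"]))  # ['once', 'upon', 'a', 'time']
```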